PhotoScene: Photorealistic Material and Lighting Transfer for Indoor Scenes

CVPR 2022

- Yu-Ying Yeh, UCSD
- Zhengqin Li, UCSD and Meta
- Yannick Hold-Geoffroy, Adobe Research
- Rui Zhu, UCSD
- Zexiang Xu, Adobe Research
- Miloš Hašan, Adobe Research
- Kalyan Sunkavalli, Adobe Research
- Manmohan Chandraker, UCSD

Abstract

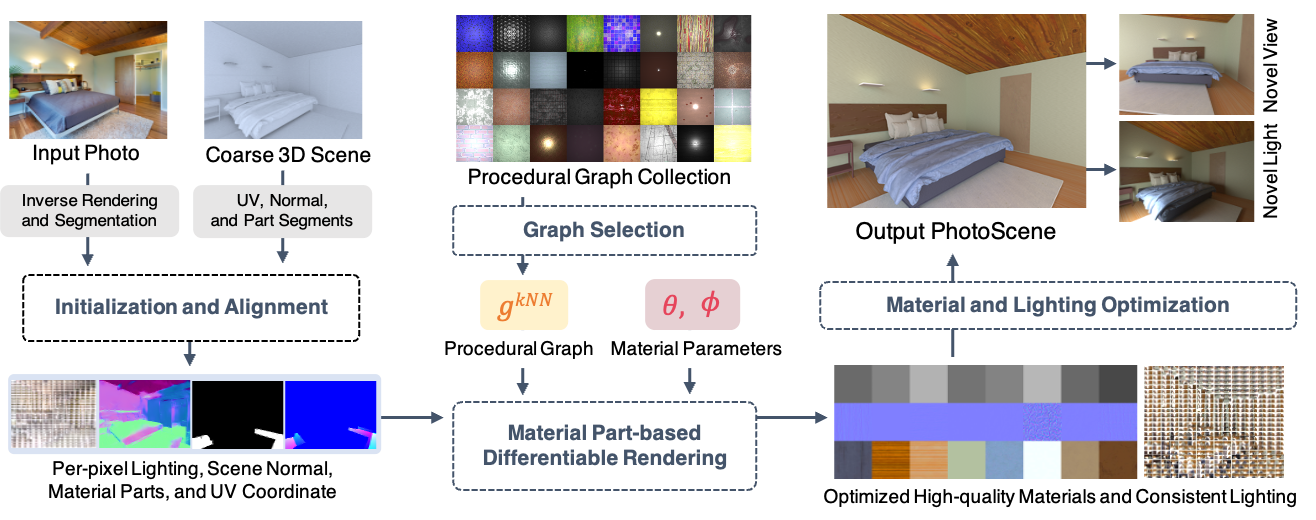

Most indoor 3D scene reconstruction methods focus on recovering 3D geometry and scene layout. In this work, we go beyond this and propose PhotoScene, a framework that takes one or more input images of a scene, along with approximately aligned CAD geometry (either reconstructed automatically or manually specified), and builds a photorealistic digital twin with high-quality materials and similar lighting. We model scene materials using procedural material graphs, which represent photorealistic and resolution-independent materials. We optimize the parameters of these graphs, their texture scale and rotation, and the scene lighting to best match the input image via a differentiable rendering layer. We evaluate our technique on object and layout reconstructions from ScanNet, SUN RGB-D, and stock photographs, and demonstrate that our method reconstructs high-quality, fully relightable 3D scenes that can be re-rendered under arbitrary viewpoints, zooms, and lighting.
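
The optimization described in the abstract (material parameters, texture transform, and lighting fitted to an image through a differentiable renderer) can be illustrated with a deliberately tiny analysis-by-synthesis loop. This is a minimal sketch under stated assumptions, not PhotoScene's implementation. The hypothetical `pattern` function (a sinusoidal stripe) stands in for a procedural material graph, a single global light intensity stands in for scene lighting, a head-on-lit Lambertian patch replaces a real scene, and hand-derived gradients replace a differentiable rendering layer. All function names (`pattern`, `render`, `loss_and_grad`, `fit`) are illustrative.

```python
import math


# Hypothetical stand-in for a procedural material graph: a sinusoidal stripe
# pattern that can be evaluated at any UV coordinate (resolution-independent).
def pattern(u, v, scale=1.0, rotation=0.0):
    # Apply a texture scale/rotation transform to the UV coordinates.
    ur = scale * (math.cos(rotation) * u - math.sin(rotation) * v)
    return math.sin(2.0 * math.pi * ur)


def render(params, uvs, scale=1.0, rotation=0.0):
    # params = (light, base, amp): a global light intensity plus two material
    # parameters. Assumed toy shading: a Lambertian patch lit head-on, so each
    # pixel is light * albedo (the cosine term is 1).
    light, base, amp = params
    return [light * (base + amp * pattern(u, v, scale, rotation)) for u, v in uvs]


def loss_and_grad(params, target, uvs, scale=1.0, rotation=0.0):
    # Mean squared error between rendered and observed pixels, plus its
    # analytic gradient; this plays the role of the differentiable renderer.
    light, base, amp = params
    n = len(uvs)
    loss = g_light = g_base = g_amp = 0.0
    for (u, v), t in zip(uvs, target):
        p = pattern(u, v, scale, rotation)
        albedo = base + amp * p
        r = light * albedo - t  # residual: rendered minus observed
        loss += r * r / n
        g_light += 2.0 * r * albedo / n
        g_base += 2.0 * r * light / n
        g_amp += 2.0 * r * light * p / n
    return loss, (g_light, g_base, g_amp)


def fit(target, uvs, init=(1.0, 0.5, 0.0), lr=0.1, steps=500):
    # Plain gradient descent on lighting and material parameters jointly.
    # Texture scale and rotation are held fixed at their defaults here.
    params = list(init)
    for _ in range(steps):
        _, grad = loss_and_grad(params, target, uvs)
        params = [p - lr * g for p, g in zip(params, grad)]
    final_loss, _ = loss_and_grad(params, target, uvs)
    return params, final_loss


# Usage: synthesize an "observed" image from known parameters, then fit.
uvs = [(i / 8, j / 8) for i in range(8) for j in range(8)]
target = render((1.5, 0.4, 0.2), uvs)  # light=1.5, base=0.4, amp=0.2
initial_loss, _ = loss_and_grad([1.0, 0.5, 0.0], target, uvs)
params, final_loss = fit(target, uvs)
print(f"loss {initial_loss:.4f} -> {final_loss:.2e}")
print("light * base =", params[0] * params[1], " light * amp =", params[0] * params[2])
```

Only the products `light * base` and `light * amp` are constrained by the image, so the fitted light intensity and albedo generally differ individually from the values used to synthesize the target. This is a toy version of the well-known scale ambiguity between albedo and lighting in inverse rendering. Texture scale and rotation are fixed in this sketch; fitting them by gradient descent would typically need a good initialization, since the loss is periodic in `rotation`.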

Results

Transfer from ScanNet (Multi-view) to OpenRooms (CAD model)

OpenRooms provides CAD models and layouts aligned to each ScanNet scene. Each material part is optimized with respect to its optimal view.

Two examples, each shown as: ScanNet input | PhotoScene result | PhotoScene under novel lighting

Transfer from stock image (Single-view) to manually-aligned objects (CAD model)

CAD models and layout are aligned manually to a single image.

Two examples, each shown as: input image | PhotoScene result | PhotoScene under novel lighting

Transfer from SUN RGB-D (Single-view) to Total3D (rough 3D model)

Rough meshes and layout are reconstructed by Total3D. MaskFormer is used to obtain panoptic labels, which enable automatic transfer.

Two examples, each shown as: input image | PhotoScene result | PhotoScene under novel lighting

BibTeX

@InProceedings{Yeh_2022_CVPR,
  author    = {Yeh, Yu-Ying and Li, Zhengqin and Hold-Geoffroy, Yannick and Zhu, Rui and Xu, Zexiang and Ha\v{s}an, Milo\v{s} and Sunkavalli, Kalyan and Chandraker, Manmohan},
  title     = {PhotoScene: Photorealistic Material and Lighting Transfer for Indoor Scenes},
  booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},
  month     = {June},
  year      = {2022},
  pages     = {18562--18571}
}